The curious case of LM repetition

# January 22, 2024

I was doing some OSS benchmarking over the weekend and was running into an odd issue. Some families of models would respond with near-gibberish, even with straightforward prompt inputs.

Given the low reported perplexity, I thought there had to be an issue. I refactored the core logic into a new notebook to isolate the extraneous factors. Here's the basic code:

model_dtype = torch.float32

device_map = "auto"

device = "cuda"

text = "Here's a recipe for baking chocolate chip cookies: Start by"

from transformers import AutoConfig, AutoModelForCausalLM, AutoTokenizer, TextStreamer

import torch

config = AutoConfig.from_pretrained(name_or_path)

tokenizer = AutoTokenizer.from_pretrained(name_or_path)

model = AutoModelForCausalLM.from_pretrained(

name_or_path,

config=config,

torch_dtype=model_dtype,

device_map=device_map

)

model.eval()

model.to(device)

model.to(model_dtype)

if tokenizer.pad_token_id is None:

print("Setting pad token to EOS")

tokenizer.pad_token = tokenizer.eos_token

def generate_output(encoded_batch):

with torch.no_grad():

return model.generate(

input_ids=encoded_batch['input_ids'],

attention_mask=encoded_batch['attention_mask'],

max_new_tokens=250,

temperature=1.0,

top_p=0.95,

top_k=50,

repetition_penalty=1.0,

no_repeat_ngram_size=0.0,

use_cache=True,

do_sample=True,

eos_token_id=tokenizer.eos_token_id,

pad_token_id=tokenizer.pad_token_id

)

batch = [text]

encoded_batch = {

key: value.to(model.device)

for key, value in tokenizer(batch, return_tensors='pt', padding=True).items()

}

encoded_output = generate_output(encoded_batch)

decoded_output = tokenizer.batch_decode(encoded_output, skip_special_tokens=True)

print("[prompt]", text)

print("[response]", decoded_output)

Couldn't be simpler, right? Load the model, tokenize the input, and generate with some pretty common sampling defaults.

Still saw the same issue. This is the raw output (all warning messages included):

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Loading checkpoint shards: 100% 2/2 [00:35<00:00, 16.18s/it]

Setting pad token to EOS

[prompt] Here's a recipe for baking chocolate chip cookies: Start by

[response] putting a big pot pot pot pot pot pot pot pot pot pot cookie cookies

cookies cookies cookies cookies cookies cookie cookies cookies cookies cookies

chocolate chip cookie cookies chocolate chip cookies cookie chocolate chip cookie

cookie cookie cookie cookies cookie cookies cookie cookie chocolate chip cookie

cookies chocolate chip cookie cookie cookie chocolate chip cookie cookie cookie

cookie cookies cookies chocolate chip cookie cookie cookie chocolate chip cookie

cookie cookie cookie cookie chocolate chip cookie cookie cookies cookie cookie

cookies cookie cookie cookies cookie chocolate chip cookie cookie chocolate chip

cookie cookie cookie cookie cookies chocolate chip cookie cookie cookie cookie

chocolate chip cookie cookie cookie chocolate chip cookie cookie cookie ...

I like cookies. But not this much. Here were my theories and what I checked along the way.

Finetuned model format

Especially in RLHF finetuned models, training inputs will be normalized to a common prompt format. This helps to guarantee a consistency between "user" text and expected output, while allowing users to specify different prompting techniques at the system level. If you've used hosted LLMs (OpenAI, Anthropic, etc.) this mirrors their conversation creation APIs. It's what they're actually doing behind the scenes.

There are varying formats that have become defacto standards. Two of the most popular are chatml and the alpaca format.

chatml:

<|im_start|>system

You are an intelligent agent.<|im_end|>

<|im_start|>user

How are you?<|im_end|>

<|im_start|>assistant

Great! How are you?<|im_end|>

Alpaca format:

### Instruction:

You are an intelligent agent.

### Input:

How are you?

### Response:

Great! How are you?

If you're violating the prompt format that the model was finetuned on, is it possible the results will be gibberish? I doubted it, but consulted the documentation again for these models. I confirmed they were all pure pretrained models, so they weren't trained with any specific prompting format. Just for kicks, I also tried to reformat the prompt data with these common formats. Same outcome.

Next theory.

Top p, top k, and all the other generation parameters

There are a lot of sampling parameters that configure how the sample selection works when the model is auto-regressively sampling. I tried to run a hyperparam sweep on top_p, top_k, repetition_penalty and no_repeat_ngram_size. Still no dice. Results basically look the same regardless of the top_p and to_k values.

The repetition parameters did more to vary the output and get rid of the explicit repetition. They're deterministically lowering the probability of sampling certain ngrams, so this was no surprise. Unfortunately the responses were still unintelligible:

[prompt] Here's a recipe for baking chocolate chip cookies: Start by

[response] melting some chocolate chips in the oven at 375°F. While they are

baking baking, bake. Let's'ss's the the some some start start baking bake bake

baking start.. baking cookie cookie cookies cookies cookie. cookie chocolate.

chocolate cookie chips cookies baking. Let'' start Some Some..".'Let's start bake.

bake let Let Let. Some Let some.Some Let let let. let some Some SOME.Lets.StartsSome

Some Cookie.'Start baking cookies' start cookie baking'CookCook'. Start..'Start Some

Start Some 'Let'Lettingting'tughtughtrichtricht. '.. 'Some. Cookie Cookie cookie

Cookie SomeSomeCookieCookie Cookie Chocolate Chocolate cookie ChocolateCookie

Chocolate Cookie..... Lets '. ChocolateChip cookie some Cookie chocolate Chocolate

Chip Chip.." 'Cookie'Cookie'SomeCookieChipChipchipCookie.Cookie

'.CookiecookiecookieCookie.Chocolate.'.'..Cook Cook.Choc.chocococoocoCook.

CookieCook.chocolate..cook.Cook.'.' 'Cook'CookCook,Cook,Cook'Cookie' 'Cholololoolo,

'LoLo LoLoChocololLoLet'sStartStart.'

Once again, way too many cookies. The problem lay elsewhere.

Finding the culprit

From the get-go, I thought it was incredibly odd that some models worked just fine and some were displaying this strange repeating behavior. After these hyperparam sweeps turned up short, I took a step back and thought about the commonalities in the actual behavior.

The key observation was that the issue appeared to be segmented not by the model itself, but by the different family of models. Whether the models were 500M, 3B, or 7B it was an issue of family instead of the model size. Other families of the same side worked just fine.

This led me to two theories:

- Either the huggingface code implementing these families is broken. Possible, but unlikely given the relative popularity of some of these models.

- Some family specific parameters that are being disabled at the configuration level.

I parsed through the AutoConfig configuration parameters. Nothing jumped out. But then I thought, what else controls model logic? Throwing caution out the window, I enabled all the conservative flags: downloading fresh parameters for the model, clearing the cache directories, and trusting remote code.

Ding ding ding. We have a winner. The key was trust_remote_code=True.

config = AutoConfig.from_pretrained(name_or_path, trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained(name_or_path, trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained(

name_or_path,

config=config,

torch_dtype=model_dtype,

device_map=device_map,

trust_remote_code=True,

)

[prompt] Here's a recipe for baking chocolate chip cookies: Start by

[response] combining 1 cup of softened butter with 1 ¼ cups of white sugar.

In a separate bowl, stir together 1 egg, 2 teaspoons of vanilla extract, and 1 ½ cups

all-purpose flour. If desired, you can toss in 1 teaspoon of cinnamon or ¼ teaspoon

each of salt and baking soda. The last ingredient you'll need is about a ¾ cup (11.4

ounces) of semisweet chocolate chips.

Dump the egg mixture into a large mixing bowl and add the butter and sugar, stirring

vigorously until they're combined. Add the dry mixture, along with the chocolate, to

the bowl. Now, using a spoon or spatula, fold the ingredients together until the

cookie dough is evenly mixed. After that, prepare two sheets of parchment paper.

Divide the dough into one-inch balls, then place the balls on the parchment. Take one

of the sheets and place it in the oven at 350 degrees for 8-9 minutes. While the

cookies bake, use another pan, grease it with olive oil, place six pieces of salmon,

season with sea salt, squeeze half of a lime over them, cover with plastic wrap,

refrigerate for about twenty minutes, bake it on each side for approximately 3 to 4

minutes

Okay, so what's going on here? My theory:

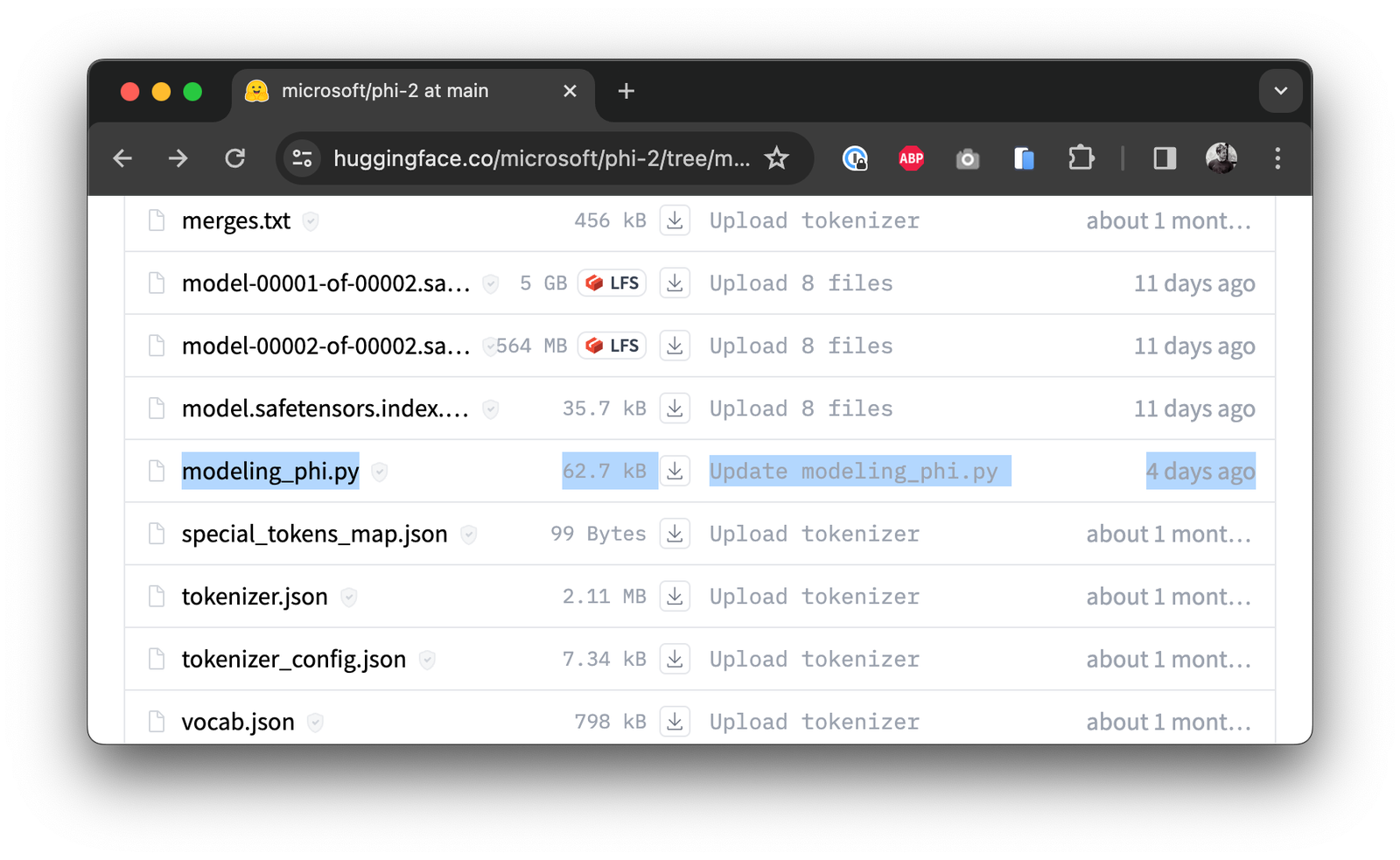

Before new model architectures are merged into the official transformers library, the only way to run them is with trust_remote_code=True. For these new models, code is typically published alongside the model weights within the huggingface model hub. You can see this most clearly in novel architectures that were published within the last few months:

When the model weights are downloaded, the code files are as well. Inference will execute this code in order to run the model. Hence the notion of remote-code. It's typically not ideal to allow arbitrary remote code execution, which is why this flag is off by default.

Architectures will then go through a review process and land in mainline transformers. After that, it's no longer required to trust remote code. If the model artifacts specify the architecture type (llama, phi, mpt, etc.) the transformers library will map the remote model weights to the local code implementation baked into transformers.

Now - what happened here? It seems that the transformers implementation has subtly skewed from its original source. It didn't skew enough to invalidate the weight files and cause a load error, which means that the parameters are loaded in according to specification. But the logic did skew enough to start generating incorrect outputs, at least with some artifact files.

Moral of the story: If you're in an isolated environment and can risk remote code execution, it's worth using the published artifacts to establish your baseline of model execution. This gives you the closest proxy to what was actually used to train the model. Everything else - including optimized code after the fact - might be better, but it's a hypothesis to test alongside with other performance guarantees.