Speeding up runpod

# December 18, 2023

Runpod.io is my favorite GPU provider right now for smaller experiments. They have pretty consistent availability of 4x/8x configurations with A100 80GB GPUs, alongside some of the current-generation NVIDIA chips.

One issue I've observed a few times now is runtime performance that varies from box to box. My working mental model of VMs is that you have full control of your allocation: if you've been granted 4 CPUs, you can push all 4 CPUs to the brink of capacity. Of course, the reality is a bit murkier depending on your underlying kernel and virtual machine manager, but usually this simple model works out fine.

On Runpod, any configuration smaller than the full 8 GPUs is multi-tenant, so you might be competing with other workloads on the same machine. A few times now I've observed sluggish performance on the box (batch preprocessing slow to complete, bash commands slow to enter, etc.).

My default when starting up new boxes has become:

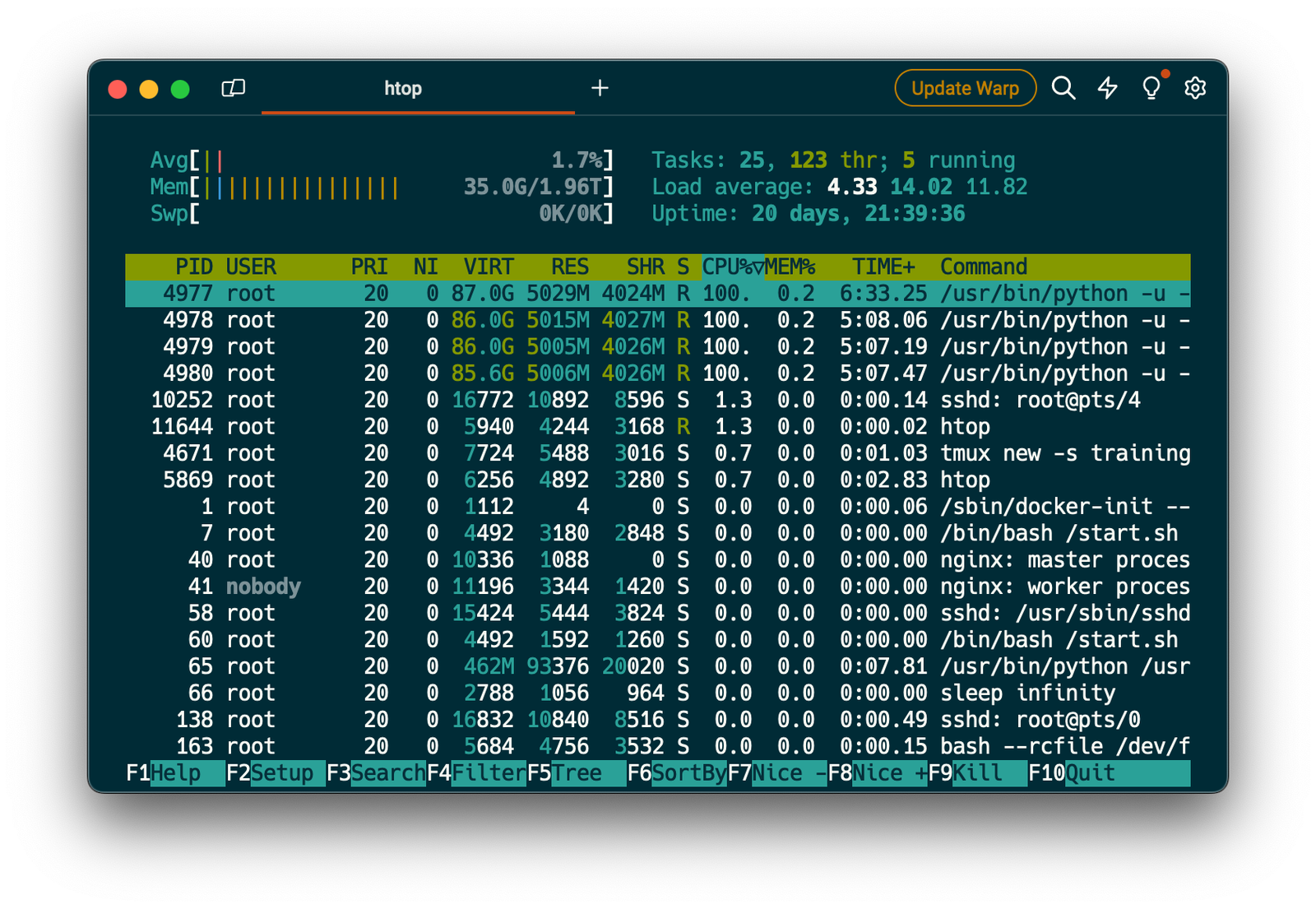

- Immediately install htop and check the current server load. It shows overall CPU and memory usage, and those figures end up being the totals for the whole box rather than just your allocation.

- When the box is overloaded, both numbers can creep up to near 100% utilization. My guess is that some default swapping is allowed on the boxes, and that behavior results in slower than average performance at the limit.

- If you need fast network IO to external services or your local machine, make sure it's colocated in a similar region when you create the box.
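If you'd rather script the htop check than eyeball it, here is a minimal sketch in Python. It assumes a Linux box where `/proc/meminfo` is readable, and it assumes (as is common in container setups, but worth verifying on your own pod) that the load average, CPU count, and memory figures describe the whole host rather than just your slice. The helper names `parse_meminfo`, `memory_used_fraction`, `load_per_cpu`, and `box_report` are my own, not anything Runpod provides.

```python
import os


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values (mostly kB)."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def memory_used_fraction(info):
    """Fraction of memory in use, preferring MemAvailable when the kernel reports it."""
    total = info["MemTotal"]
    available = info.get("MemAvailable", info.get("MemFree", 0))
    return (total - available) / total


def load_per_cpu(load_1min, cpu_count):
    """1-minute load average divided by logical CPU count; near or above 1.0 means saturated."""
    return load_1min / max(cpu_count, 1)


def box_report():
    """Collect a rough whole-box load snapshot (Linux only)."""
    load_1min, _, _ = os.getloadavg()
    cpus = os.cpu_count() or 1
    with open("/proc/meminfo") as f:
        info = parse_meminfo(f.read())
    return {
        "cpus": cpus,
        "load_1min": load_1min,
        "load_per_cpu": load_per_cpu(load_1min, cpus),
        "memory_used_fraction": memory_used_fraction(info),
    }


# Example usage on a freshly started box:
#   print(box_report())
```

A `load_per_cpu` close to or above 1.0, or a `memory_used_fraction` close to 1.0, before you have started any work of your own is a hint that the box is already busy with other tenants.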

If this happens to you and you need quicker processing:

- Consider using an A100 SXM 80GB configuration. I've found the speed of these boxes and their availability to be higher than the stock A100 80GBs.

- As a last resort, consider upgrading your GPU allocation as well. You'll get more CPUs and memory to go alongside the GPUs, which will also force their task allocator to place you on a box with less overall load.

Switching to a less loaded box has decreased some of my processing tasks from 3h+ to 10 minutes. It can make a world of difference if you're observing performance that's meaningfully slower than when you're doing development locally.
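To put a number on "slower than local", one option is to run the same tiny CPU-bound task on both machines and compare wall-clock times. The sketch below is only a rough illustration: the SHA-256 workload, the function names `cpu_benchmark` and `slowdown`, and the default sizes are all arbitrary choices of mine, and a single-threaded loop like this won't capture every kind of contention (memory pressure or disk IO, for example).

```python
import hashlib
import time


def cpu_benchmark(rounds=200, payload_mb=1):
    """Time a fixed amount of single-threaded hashing work; returns elapsed seconds."""
    payload = b"\0" * (payload_mb * 1024 * 1024)
    start = time.perf_counter()
    for _ in range(rounds):
        hashlib.sha256(payload).digest()
    return time.perf_counter() - start


def slowdown(remote_seconds, local_seconds):
    """How many times slower the remote run was than the local one."""
    return remote_seconds / local_seconds


# Example usage: run cpu_benchmark() locally and on the box, then compare.
#   local = cpu_benchmark()    # on your laptop
#   remote = cpu_benchmark()   # on the pod
#   print(slowdown(remote, local))
```

For scale, going from 3 hours to 10 minutes is `slowdown(180, 10)`, an 18x difference, so a heavily loaded box should stand out clearly even with a crude test like this.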