Manylinux and cross-compiling go wheels

# June 21, 2024

The core logic in Mountaineer is implemented in Rust for finer control over memory management and threading lifecycle. As of 0.3.0, it also ships an embedded version of esbuild, which compiles TypeScript into the JavaScript files that are shipped alongside the webapp and used for server-side rendering.[1] esbuild, however, is a golang dependency, not a Rust one, so we're stacking a few separate FFI (foreign function interface) layers in order to support the full build pipeline.

Python <- Rust <- Golang

This particular integration leverages Rust's stellar FFI support: we compile the golang code into a C-compatible library and reference its C functions from native Rust code. With a custom build.rs, this process becomes largely transparent to us during development. We can then use our existing Rust compiler to create the wheels and headers for the Python project.[2]

The initial release only supported Linux distributions that had more modern versions of glibc. Per this issue, it required some workarounds to install Mountaineer properly on older releases like Ubuntu 20.04. I wanted to maximize the range of host OSes that our wheels cover, so users don't have to worry about the dependency pipeline.

Debugging wheels

The original issue report described the end result of no matching wheel being available: the installer falls back to building from source, which requires rust, golang, and system development headers to be installed locally. Unless users are developing with that same stack, the chances of already having these packages installed are slim. So the symptom is an error:

xxx

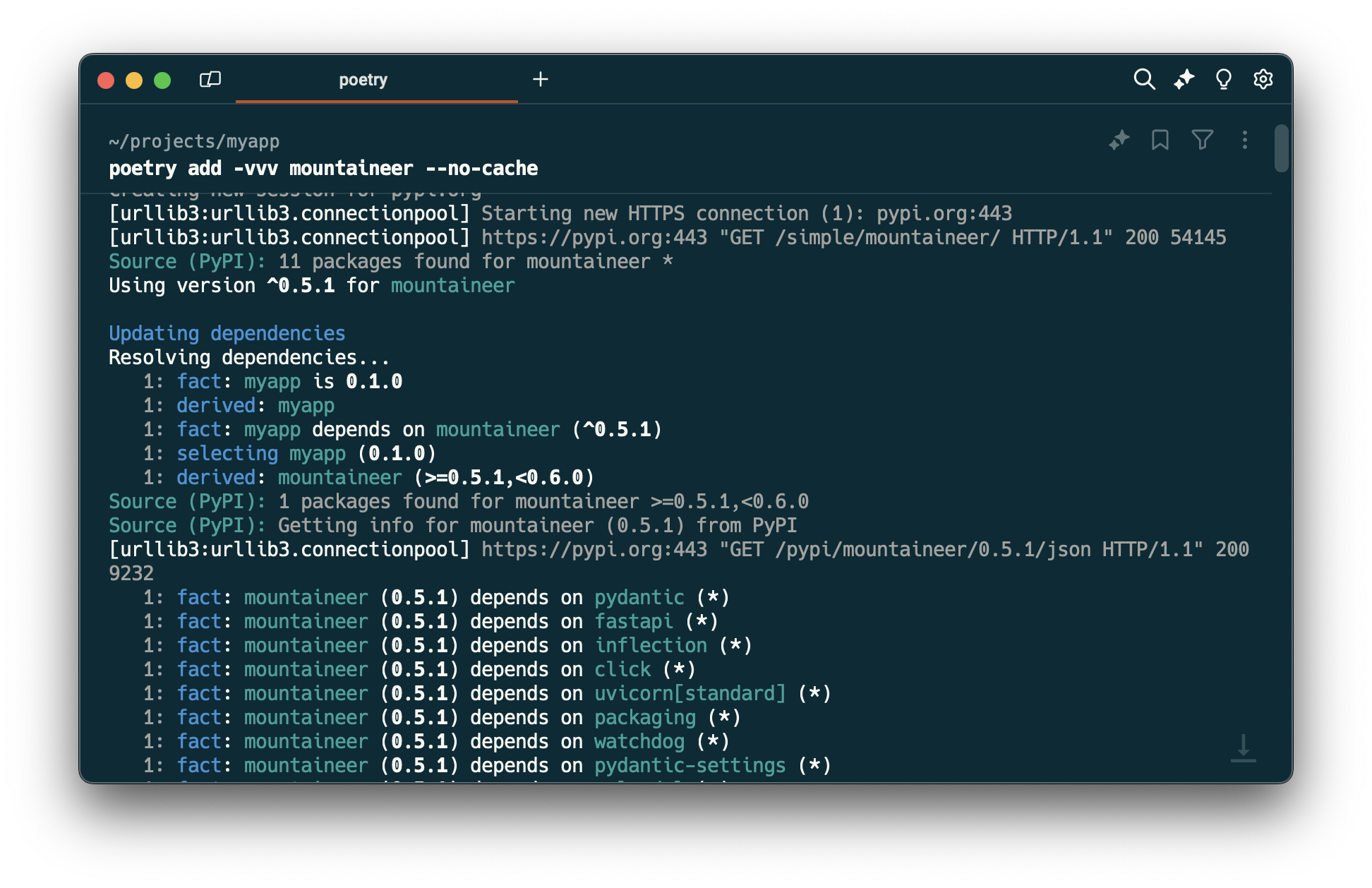

Anytime I see build errors when installing libraries that I know ship wheels, I double check why we're not pulling the wheels in the first place. Poetry makes it pretty easy to increase the logging verbosity:

aaa

Wheels are pre-built package archives that can carry compiled binaries alongside the Python code. They let a package ship logic from compiled languages instead of forcing every code path through the Python interpreter's bytecode.[3]

The correct wheel for your host is resolved by filename and filename alone. If a parameter encoded in the filename doesn't match your host, that wheel won't be downloaded. The parameters themselves depend on the operating system. Consider the breakdown of the metadata stored in this wheel's filename for macOS:

mountaineer-0.5.1-cp312-cp312-macosx_11_0_arm64.whl

- mountaineer [package name]
- 0.5.1 [version]
- cp312-cp312 [python version]
- macosx_11_0 [os type/version]
- arm64 [CPU architecture]

This convention is similar but a little different on Linux:

mountaineer-0.5.1-cp312-cp312-manylinux_2_17_aarch64.manylinux2014_aarch64.whl

- mountaineer [package name]
- 0.5.1 [version]
- cp312-cp312 [python version]
- manylinux [all Linux distros]
- 2_17 [glibc version]
- aarch64 [CPU architecture]
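As a rough illustration of the naming convention, the sketch below splits a wheel filename into the fields described above using only string operations. `WheelName` and `parse_wheel_filename` are hypothetical names made up for this example, not part of pip or any packaging library, and real installers handle more edge cases than this does.

```python
from typing import List, NamedTuple


class WheelName(NamedTuple):
    distribution: str
    version: str
    python_tag: str
    abi_tag: str
    platform_tags: List[str]


def parse_wheel_filename(filename: str) -> WheelName:
    """Split a wheel filename into its dash-separated fields (illustrative only)."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel filename: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 6:
        # An optional build tag can sit between the version and the python tag.
        distribution, version, _build, python_tag, abi_tag, platform = parts
    elif len(parts) == 5:
        distribution, version, python_tag, abi_tag, platform = parts
    else:
        raise ValueError(f"unexpected number of fields in: {filename}")
    # A single wheel can declare several platform tags, joined by dots.
    return WheelName(distribution, version, python_tag, abi_tag, platform.split("."))
```

Running it on the Linux filename above gives two platform tags, `manylinux_2_17_aarch64` and `manylinux2014_aarch64`, which are two spellings of the same glibc 2.17 requirement.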

Wheels on Linux depend on only two things: the CPU architecture and the version of glibc they were linked against.

glibc dependency

glibc is the GNU implementation of the C standard library, which wraps essential routines around a wide range of system calls. It sits at the lowest level of what we expect out of languages - input/output processing, memory management, string/math operations, etc. Since C is a bare-bones language by itself, glibc provides all the system-specific bindings for the underlying language. CPython (the default python interpreter) is built on top of these primitives. Compiled Linux wheels dynamically link glibc, so they assume that a shared glibc is available on the local system.
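To see which glibc a given host actually provides, the standard library's `platform.libc_ver()` reports the C library the running interpreter is linked against. The helper below is a small sketch around that call; the name `host_glibc_version` is made up for this example, and it returns `None` on hosts that don't report glibc (macOS, Windows, and typically musl-based distros).

```python
import platform
from typing import Optional, Tuple


def host_glibc_version() -> Optional[Tuple[int, int]]:
    """Return (major, minor) of the host glibc, or None if glibc isn't reported."""
    lib, version = platform.libc_ver()
    if lib != "glibc" or not version:
        return None
    parts = version.split(".")
    try:
        return int(parts[0]), int(parts[1])
    except (IndexError, ValueError):
        # Unexpected version string; treat it as unknown rather than guessing.
        return None
```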

Wheels built against an old glibc will work on all newer versions, but wheels built against a newer glibc won't work on host operating systems that have older versions. You therefore want to build with the lowest version of glibc that you possibly can in order to maximize client compatibility. If a client doesn't satisfy a wheel's conditions, pip will fall back to compiling the package from source. This both takes time and is relatively demanding, since it assumes that rust, golang, and development headers are available on the local system.
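The compatibility rule above can be sketched as a comparison between the glibc version encoded in a manylinux tag and the host's glibc version. This is a simplified model for illustration, not pip's actual resolution logic; `parse_manylinux_tag` and `wheel_tag_fits_host` are hypothetical helper names. The legacy aliases map to the glibc versions that PEP 600 assigns them.

```python
import re
from typing import Optional, Tuple

# Legacy manylinux aliases and the glibc version each one stands for (PEP 600).
LEGACY_ALIASES = {
    "manylinux1": (2, 5),
    "manylinux2010": (2, 12),
    "manylinux2014": (2, 17),
}

_PERENNIAL = re.compile(r"^manylinux_(\d+)_(\d+)_(.+)$")
_LEGACY = re.compile(r"^(manylinux1|manylinux2010|manylinux2014)_(.+)$")


def parse_manylinux_tag(tag: str) -> Optional[Tuple[Tuple[int, int], str]]:
    """Return ((glibc_major, glibc_minor), arch), or None for non-manylinux tags."""
    match = _PERENNIAL.match(tag)
    if match:
        return (int(match.group(1)), int(match.group(2))), match.group(3)
    match = _LEGACY.match(tag)
    if match:
        return LEGACY_ALIASES[match.group(1)], match.group(2)
    return None


def wheel_tag_fits_host(tag: str, host_glibc: Tuple[int, int], host_arch: str) -> bool:
    """A wheel fits when the architecture matches and its glibc is not newer than the host's."""
    parsed = parse_manylinux_tag(tag)
    if parsed is None:
        return False
    required_glibc, arch = parsed
    return arch == host_arch and required_glibc <= host_glibc
```

For a host with glibc 2.31 on x86_64, a `manylinux_2_17_x86_64` wheel fits while a `manylinux_2_34_x86_64` wheel does not, which is why building against the oldest glibc you can widens coverage.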

With wheels, we want to build once for as many clients as we can.

Manylinux

The manylinux project provides docker containers that bundle old versions of glibc to maximize compatibility. To properly support these outdated versions, they use a few different base images that originally shipped with older glibc versions. Ubuntu and CentOS are the two most common ones. When you want to build your wheel, you download these docker images, modify the environment to install any external packages that you need, and build your project. Critically, manylinux also provides cross-compilation utilities so you can still build for the aarch64 instruction set even if you're running on an x86 machine, and vice versa.

There are published containers on GitHub for targeting a suite of different architectures. We're most interested in the x86_64 and aarch64 varieties, since x86 (Intel and AMD) and ARM chips cover the majority of server and development machines:

manylinux2014_x86_64

manylinux2014_aarch64

These containers are not intended to run on hosts of different architectures; they assume you're going to be running the compilation phase on an x86 runner.[4] Instead, they ship the cross-compilation headers that allow the environment to compile a project for another architecture. Doing so properly aligns the compiled symbols and memory sizes to the values the target supports.

Higher level build pipelines like Maturin bake in manylinux support by default, so these lower level steps of manipulating the docker images are usually handled transparently to you.

Rust Builder

Mountaineer uses maturin's GitHub Action to coordinate builds across these different platform architectures. When building on Mac and Windows, the builds run directly on the host device. But for the Linux builds, which have to target multiple architectures, we can boot any Linux host operating system. For the build itself, the action pulls from the manylinux images and runs the build within a docker container.

One such image is ghcr.io/rust-cross/manylinux2014-cross:aarch64.

Go Builder

Things get more complicated with Go, since you need to first compile the golang files into a static artifact and then link them to the proper architecture headers within the rust compiler. We'll then re-build the final rust package, which is the collection of logic from rust and golang together.

Go Installation

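To compile golang into a library that can actually be called through rust's FFI, you need to enable CGO. Exporting Go functions under the C calling convention goes through cgo, and the go toolchain disables cgo by default when cross-compiling.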

export CGO_ENABLED=1

Without this flag you'll see misleading errors about "go: no Go source files". There are in fact golang source files; the error instead points to a deeper incompatibility between the CC compiler and CGO.

x86 has better support for cross-compiling to aarch64, so it's best to use an x86-based runner. You'll want to install the amd64 version of go regardless of whether or not you're cross-compiling.

wget -q https://go.dev/dl/go${GOVERSION}.linux-amd64.tar.gz
tar -C /usr/local -xzf go${GOVERSION}.linux-amd64.tar.gz
rm -f go${GOVERSION}.linux-amd64.tar.gz

# Set up Go environment variables
export GOROOT=/usr/local/go
export PATH=$PATH:$GOROOT/bin

Cross-Compilation

Cross-compilation targets require manually wiring up the LLVM cross-compilation toolchain. You can customize the logic that the maturin GitHub Action runs on docker bootup with the before-script-linux argument. This is our main entrypoint for modifying the docker environment to install our required build dependencies without forking the base image.

- name: build wheels
  uses: PyO3/maturin-action@v1
  with:
    target: ${{ matrix.target }}
    manylinux: ${{ matrix.manylinux || 'auto' }}
    args: -vv --release --out dist --interpreter ${{ matrix.interpreter || '3.10 3.11 3.12' }}
    rust-toolchain: stable
    command: build
    before-script-linux: |
      ...

The core of before-script-linux is installing the llvm or clang compilers:

# Determine distribution from /etc/os-release
DISTRO=$(grep ^ID= /etc/os-release | cut -d= -f2 | tr -d '"')

# Install necessary packages based on the distribution
if [ "$DISTRO" = "centos" ]; then
    # CentOS specific package installation
    yum -y install wget llvm-toolset-7 centos-release-scl
    export PATH=/opt/rh/llvm-toolset-7/root/usr/bin:/opt/rh/llvm-toolset-7/root/usr/sbin:/opt/rh/devtoolset-10/root/usr/bin:$PATH
    export LIBCLANG_PATH=/opt/rh/llvm-toolset-7/root/usr/lib64/
elif [ "$DISTRO" = "ubuntu" ]; then
    # Ubuntu specific package installation
    apt-get update
    apt-get -y install wget clang
    # For Ubuntu, the default location of libclang.so should be automatically detected
else
    echo "Unsupported distribution: $DISTRO"
    exit 1
fi
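The distribution check at the top of this script is easy to get subtly wrong, so it can help to reason about it outside the container. The sketch below mirrors the `grep ^ID= | cut | tr` pipeline in Python against the text of an os-release file; `distro_id` is a hypothetical helper for illustration and is not part of the build itself.

```python
def distro_id(os_release_text: str) -> str:
    """Return the ID field from the contents of an os-release file."""
    for line in os_release_text.splitlines():
        key, sep, value = line.partition("=")
        # Match the ID key exactly so that ID_LIKE and VERSION_ID are skipped.
        if sep and key.strip() == "ID":
            return value.strip().strip('"').strip("'")
    raise ValueError("no ID field found")
```

CentOS quotes the value (`ID="centos"`) while Ubuntu does not (`ID=ubuntu`), which is why the shell version strips the quotes before comparing.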

x86 base images use CentOS and aarch64 images use Ubuntu. You need to install the proper dependencies for both depending on the container. The next step finds the cross-compilation headers and exposes them to the builder. There are different gnu/gcc versions depending on the architecture. Leaving the defaults in place will compile for the default host (x86), which is not what we want.

case "${{ matrix.target }}" in
    x86_64) export GOARCH="amd64";;
    aarch64)
        export GOARCH="arm64";
        export PATH=$PATH:/usr/aarch64-unknown-linux-gnu/bin;
        aarch64-unknown-linux-gnu-gcc --version;
        export CC=aarch64-unknown-linux-gnu-gcc;;
    *) echo "Unsupported architecture: ${{ matrix.target }}"; exit 1;;
esac

echo "GOARCH=${GOARCH}"

GOVERSION="1.22.1"

Building for the eventual x86 target is done by default, so no build parameters need to be overridden.

For aarch64 targets, updating GOARCH indicates that go should try to cross-compile with the expected headers. You also need to extend the PATH and point CC at the gcc that's included specifically for aarch64 cross-compilation. This bin folder isn't in the default system path, so without the change the golang toolkit won't be able to find and call out to this version of gcc. It would instead try to build your golang project with the default gcc, which compiles it for x86 instead of cross-compiling for aarch64.
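To make the per-target differences easier to see side by side, here is the same mapping written as a small Python function that returns the environment a go build would need. It is only a restatement of the shell `case` block above under the same paths and compiler names; `go_cross_env` is a hypothetical helper and the actual pipeline does this in the before-script-linux shell script.

```python
import os
from typing import Dict, Optional

AARCH64_BIN = "/usr/aarch64-unknown-linux-gnu/bin"
AARCH64_CC = "aarch64-unknown-linux-gnu-gcc"


def go_cross_env(target: str, base_env: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Build the environment variables for compiling golang for the given target."""
    env = dict(os.environ if base_env is None else base_env)
    # CGO is required so the golang code can be exposed as C functions.
    env["CGO_ENABLED"] = "1"
    if target == "x86_64":
        # Native build on an x86 runner: the default gcc is already correct.
        env["GOARCH"] = "amd64"
    elif target == "aarch64":
        env["GOARCH"] = "arm64"
        # The cross gcc lives outside the default PATH, so add its folder.
        existing = env.get("PATH", "")
        env["PATH"] = existing + os.pathsep + AARCH64_BIN if existing else AARCH64_BIN
        env["CC"] = AARCH64_CC
    else:
        raise ValueError(f"Unsupported architecture: {target}")
    return env
```

The returned dictionary could be passed as the `env` argument of `subprocess.run` when invoking the go toolchain from a build script.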

On build pipelines

The elusive dream of software for a long time was ubiquity. Regardless of chipset, regardless of manufacturer, code would be the layer of logic that was abstracted away from the physical. You'd specify what needs to be done - what is going to add the most value to users - and it would run where they need it to. This was the lofty dream that inspired LLVM and early interpreters.

Now we're so close to that promised land. For the most part you don't have to think about cross-architecture differences.[5] You write the code and your build pipeline takes care of the rest; perhaps that's a separate executable for each, perhaps that's some bytecode that can be transferred to a local VM. Rust's builder is so extensive that it has first-class support for the majority of architectures.

But still sometimes you have to dive into the weeds. I find this most commonly happens when you're interacting at the FFI level. Once you combine multiple compilers together (let alone roughly 3 in the case of the pipeline here), you're going to have to juggle compatible definitions.

But once you implement the build pipeline, it becomes largely set-it-and-forget-it. It becomes another primitive that you're building on top of. A unique layer of abstraction, less ubiquitous than LLVM certainly, but a stable stack in its own right. When you take a step back and think about the average CI pipeline as doing something similar - combining primitives in unique ways to create something fully new and yet largely stable - it's quite remarkable every time.

1. Previously we required a system-wide installation of esbuild. The new wheels make it much easier to get started. You just need Mountaineer and nothing else.

2. Compiling rust and golang into separate executables that could be merged into the wheel seemed like another good option, but that duplicates a lot of boilerplate within the wheel builder.

3. Wheels come from the old python deployment format called "cheese". Wheels of cheese. How they relate to snakes I have no idea. 🧀

4. Or conceivably emulating the x86 instruction set on another architecture.

5. In no small part due to the outcome of the architecture wars. AMD and Intel came out ahead by a mile.