Debugging a Mountaineer rendering segfault

# March 3, 2025

I had quite the morning debugging a segfault in the Mountaineer rendering stack last week. Here's a walkthrough of what was happening.

The silent error

As Saywhat has grown in popularity, I needed to upgrade our webhost cluster to larger instances (mostly for faster CPUs during peak load). In our infrastructure, this involved spinning up a new machine in Terraform, bootstrapping it with our Docker container, adding it to the load balancer, and then removing the old machines.

I started with a single-machine canary to make sure everything worked as intended on the new config.

The new machine came up and looked healthy. Traefik could reach its healthcheck endpoint and started routing traffic on :80 to the new container. But as soon as we added it to the load balancer to serve real traffic, it started crashing. Once the crash loop began, it never stopped: the container would go down, come back up, and crash again. Yet there were no explicit errors in the Docker logs. The crash was totally silent.

I took the machine out of rotation and started investigating. Since the machine was healthy when it wasn't receiving traffic, I forwarded its port to my local computer over SSH so I could send real traffic to it myself.¹

```shell
ssh -L 3000:localhost:3000 root@10.10.10.10
```

With the traffic isolated to my own requests, I could follow exactly what was happening, step by step. Like clockwork:

- GET /auth/login

- (enter credentials)

- POST /auth/login

- GET /

- (browser connection dropped & app crashes)

Still, there was no explicit error in the logs. I ran `docker events` to catch any error in real time and finally found my smoking gun:

```
2025-02-27T05:53:32.301603755Z container die 2549f85638f48c3c958ffa2374223301a43758067f821e9acea9e2aafb1d0d93
  (execDuration=119, exitCode=139,
  image=registry.digitalocean.com/mountaineer/amplify:30565ca807654eda6e77b874e233a282bab51be0,
  name=amplify-web-30565ca807654eda6e77b874e233a282bab51be0,
  role=web,
  service=amplify,
  traefik.http.middlewares.amplify-web-retry.retry.attempts=5,
  traefik.http.middlewares.amplify-web-retry.retry.initialinterval=500ms,
  traefik.http.routers.amplify-web.middlewares=amplify-web-retry@docker,
  traefik.http.routers.amplify-web.priority=2,
  traefik.http.routers.amplify-web.rule=PathPrefix(`/`),
  traefik.http.services.amplify-web.loadbalancer.server.scheme=http)
```

The `exitCode=139` was the key: 139 is 128 + 11, and signal 11 is SIGSEGV. It's a segfault.
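Docker follows the shell convention of reporting a process killed by signal N as exit code 128 + N. Here is a minimal Python sketch (a hypothetical helper, not part of Docker or Mountaineer) that decodes such codes. It also accepts Python's `subprocess` convention, where the same crash shows up as a negative return code such as -11. Signal names come from the standard `signal` module, so they match the platform the script runs on.

```python
import signal


def describe_exit_code(code: int) -> str:
    """Explain a process exit code, decoding deaths by signal.

    Docker and POSIX shells report "killed by signal N" as 128 + N, while
    Python's subprocess module reports the same thing as -N.
    """
    if code < 0:
        signum = -code          # subprocess convention
    elif code > 128:
        signum = code - 128     # shell / Docker convention
    else:
        return f"exited with status {code}"
    try:
        return f"killed by {signal.Signals(signum).name}"
    except ValueError:          # not a signal number this platform defines
        return f"killed by unknown signal {signum}"


print(describe_exit_code(139))  # killed by SIGSEGV
print(describe_exit_code(132))  # killed by SIGILL
```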

Machine differences

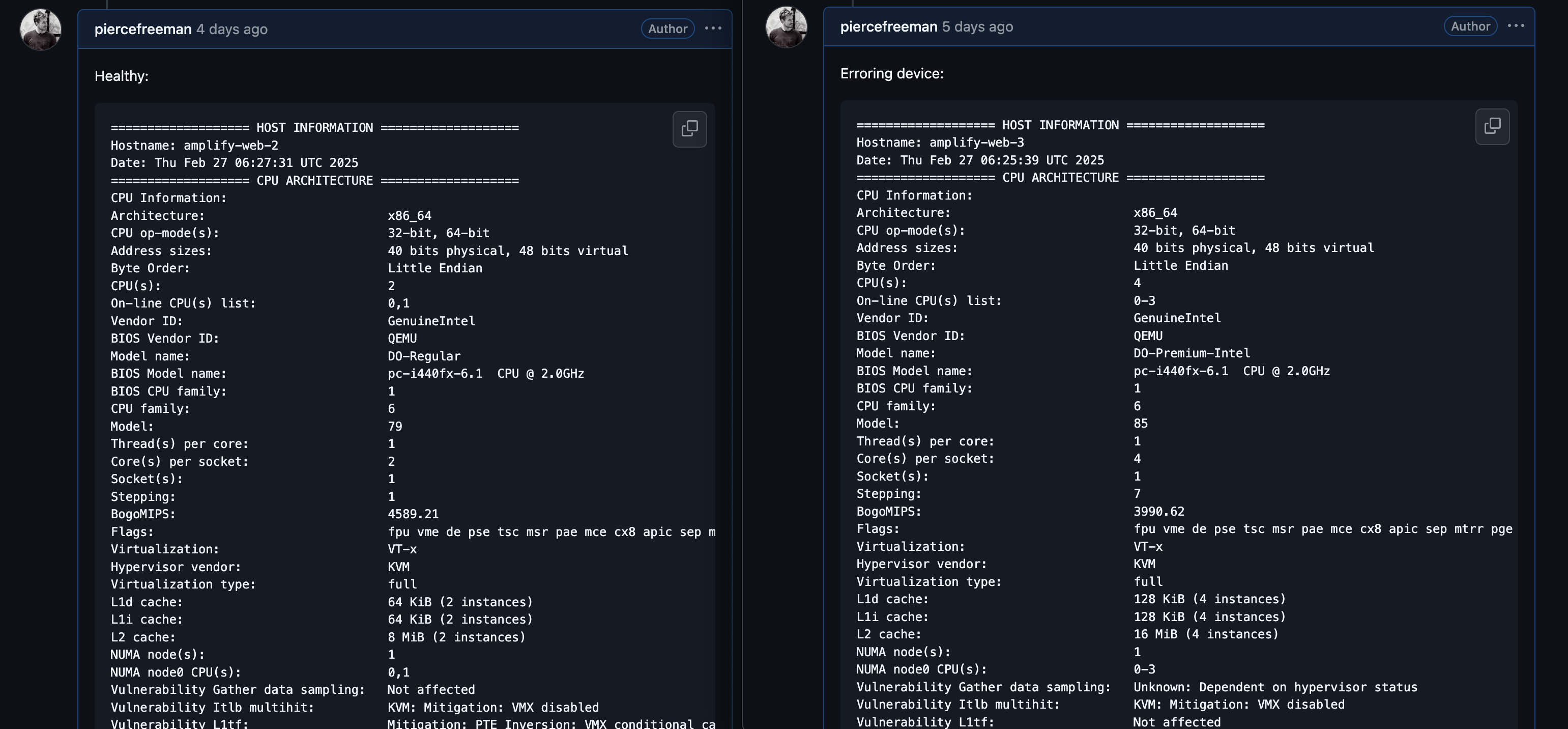

Something was obviously different between the old and new machines. Since our Docker image was identical and Docker was on the same version, I suspected something about the VM's host architecture.

I compared the two machines with my go-to Gist for dumping a machine's full system configuration. It's been handy whenever I need to spin up a compatible VM to reproduce a user bug.

In this case, there were so many CPU and hardware differences that narrowing them down to one root cause from this log alone wasn't feasible. Instead, it was more productive to boot up a fresh VM and try to reproduce the issue from scratch.

Debugging with gdb

To get more flexibility and rule out Docker's containerization as the cause of the crash, I cloned the codebase and ran it directly on the host. Thankfully, it reproduced there as well:

```
./run_local.sh: line 7: 83648 Segmentation fault RUST_BACKTRACE=1 MOUNTAINEER_LOG_LEVEL=DEBUG poetry run uvicorn amplify.main:app --host 0.0.0.0 --port 3000 --log-level warning
```

At this point I had narrowed the source of the issue down to the Mountaineer rendering pipeline. Specifically, here:

```python
# Convert to milliseconds for the Rust worker
render_result = mountaineer_rs.render_ssr(
    full_script, int(hard_timeout * 1000) if hard_timeout else 0
)
```

The web service accepts the request and resolves its data dependencies, but fails when it finally tries to run server-side rendering. A lot happens under the hood at this point in the pipeline: spinning up a new V8 isolate, compiling the script, and so on. It was also the most likely place for a segfault, given the low-level C++ calls and the differences in compiling for each host architecture.

Running gdb against the released build wasn't likely to help much, since our published Mountaineer binary is compiled with heavy optimizations and stripped of symbols. Instead, I cloned Mountaineer, compiled it with debug symbols enabled, and pointed our Saywhat pyproject at the local wheel.

```shell
source $(poetry env info --path)/bin/activate
gdb --args python -m uvicorn amplify.main:app --host 0.0.0.0 --port 3000 --log-level warning
deactivate
```

Typing `run` at the gdb prompt gave me the segfault trace:

```
[New Thread 0x7fff69e006c0 (LWP 134251)]
[Thread 0x7fff69e006c0 (LWP 134251) exited]
[New Thread 0x7fff69e006c0 (LWP 134252)]
[New Thread 0x7fff5cc006c0 (LWP 134253)]
[Thread 0x7fff69e006c0 (LWP 134252) exited]
[New Thread 0x7fff69e006c0 (LWP 134254)]

Thread 36 "python" received signal SIGSEGV, Segmentation fault.
[Switching to Thread 0x7fff69e006c0 (LWP 134254)]
0x00007ffff2f728b1 in v8::internal::FreeListManyCachedFastPathBase::Allocate(unsigned long, unsigned long*, v8::internal::AllocationOrigin) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
(gdb)
```

The crash was deep inside V8, so printing the full backtrace gave me more context:

```
(gdb) bt full
#0 0x00007ffff2f728b1 in v8::internal::FreeListManyCachedFastPathBase::Allocate(unsigned long, unsigned long*, v8::internal::AllocationOrigin) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#1 0x00007ffff26adb8d in v8::internal::PagedSpaceAllocatorPolicy::TryAllocationFromFreeList(unsigned long, v8::internal::AllocationOrigin) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#2 0x00007ffff26ae1b7 in v8::internal::PagedSpaceAllocatorPolicy::RefillLab(int, v8::internal::AllocationOrigin) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#3 0x00007ffff26ad05d in v8::internal::MainAllocator::AllocateRawSlowUnaligned(int, v8::internal::AllocationOrigin) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#4 0x00007ffff26854fa in v8::internal::HeapAllocator::AllocateRawWithLightRetrySlowPath(int, v8::internal::AllocationType, v8::internal::AllocationOrigin, v8::internal::AllocationAlignment) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#5 0x00007ffff2686782 in v8::internal::HeapAllocator::AllocateRawWithRetryOrFailSlowPath(int, v8::internal::AllocationType, v8::internal::AllocationOrigin, v8::internal::AllocationAlignment) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#6 0x00007ffff266b9f8 in v8::internal::Factory::CodeBuilder::BuildInternal(bool) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#7 0x00007ffff266c18e in v8::internal::Factory::CodeBuilder::Build() ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#8 0x00007ffff2b46c1c in v8::internal::RegExpMacroAssemblerX64::GetCode(v8::internal::Handle<v8::internal::String>) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#9 0x00007ffff2fc800c in v8::internal::RegExpCompiler::Assemble(v8::internal::Isolate*, v8::internal::RegExpMacroAssembler*, v8::internal::RegExpNode*, int, v8::internal::Handle<v8::internal::String>) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#10 0x00007ffff29eab83 in v8::internal::RegExpImpl::Compile(v8::internal::Isolate*, v8::internal::Zone*, v8::internal::RegExpCompileData*, v8::base::Flags<v8::internal::RegExpFlag, int, int>, v8::internal::Handle<v8::internal::String>, v8::internal::Handle<v8::internal::String>, bool, unsigned int&) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#11 0x00007ffff29ea365 in v8::internal::RegExpImpl::CompileIrregexp(v8::internal::Isolate*, v8::internal::Handle<v8::internal::IrRegExpData>, v8::internal::Handle<v8::internal::String>, bool) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#12 0x00007ffff29e94c9 in v8::internal::RegExpImpl::IrregexpPrepare(v8::internal::Isolate*, v8::internal::Handle<v8::internal::IrRegExpData>, v8::internal::Handle<v8::internal::String>) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#13 0x00007ffff29eb214 in v8::internal::RegExpGlobalCache::RegExpGlobalCache(v8::internal::Handle<v8::internal::RegExpData>, v8::internal::Handle<v8::internal::String>, v8::internal::Isolate*) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#14 0x00007ffff2ffdaf8 in v8::internal::Runtime_RegExpExecMultiple(int, unsigned long*, v8::internal::Isolate*) ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#15 0x00007ffff2d5ad36 in Builtins_CEntry_Return1_ArgvOnStack_NoBuiltinExit ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#16 0x00007ffff2dec4c0 in Builtins_RegExpReplace ()
from /root/.cache/pypoetry/virtualenvs/amplify-piK6NfjA-py3.12/lib/python3.12/site-packages/mountaineer/mountaineer.abi3.so
No symbol table info available.
#17 0x00002dc23cf9d9c1 in ?? ()
```

The innermost frame is V8's `v8::internal::FreeListManyCachedFastPathBase::Allocate(unsigned long, unsigned long*, v8::internal::AllocationOrigin)`. The crash happens during a heap allocation, but is that because of corrupted memory, or because we ran out of memory?

The fact that the allocation was triggered by a regex operation (V8 was compiling a regex inside `Builtins_RegExpReplace`) gave me pause. Regexes have a well-known failure mode, catastrophic backtracking, where certain patterns take exponential time on unlucky input. It's common enough to have its own denial-of-service classification: ReDoS.
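For intuition, here is the textbook catastrophic-backtracking case, sketched with Python's `re` rather than V8's Irregexp. Both are backtracking engines, but any timings this prints are Python's, not V8's. The nested quantifier in `^(a+)+$` can split a run of `a`s in exponentially many ways, and the engine tries those splits before concluding that the trailing `b` makes the match fail.

```python
import re
import time

# Textbook catastrophic-backtracking pattern: the nested "+" quantifiers let a
# run of n "a"s be split into groups in 2**(n-1) different ways.
EVIL = re.compile(r"^(a+)+$")


def time_failed_match(n: int) -> float:
    """Return the seconds spent failing to match n 'a's followed by one 'b'."""
    subject = "a" * n + "b"
    start = time.perf_counter()
    EVIL.match(subject)  # always None: the trailing "b" can never match
    return time.perf_counter() - start


for n in (12, 14, 16, 18):
    print(f"n={n}: {time_failed_match(n):.4f}s")
```

Each extra `a` roughly doubles the work, so a slightly longer input can stall a worker for seconds. This failure mode is chiefly about CPU time; the question here was whether something similar could push the isolate past its memory limit.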

V8 isolates are governed by fairly conservative default heap limits. Was it possible that some bug in our regex logic caused memory to spiral past that limit?

Two things disproved this hypothesis:

- I set a gdb breakpoint on the `Allocate` function and measured system memory usage there: it never exceeded even 100MB of overhead.

- V8's specified response to running out of memory is a SIGILL, but our crash was a SIGSEGV.

So what else could the issue be? Corrupted memory, but where exactly?

Our Rust implementation has two codepaths for rendering: one where the rendering process has no timeout, and one where it does:

```rust
if hard_timeout > 0 {
    timeout::run_thread_with_timeout(
        || {
            let js = Ssr::new(js_string, "SSR");
            js.render_to_string(None)
        },
        Duration::from_millis(hard_timeout),
    )
} else {
    // Call inline, no timeout
    let js = Ssr::new(js_string, "SSR");
    js.render_to_string(None)
}
```

To enforce the timeout in Rust, we spawn the V8 isolate on a new thread and hard-terminate it if the timeout elapses. Sharing memory across threads is often a recipe for disaster, but we shouldn't be doing that here: we have proper mutexes around global singletons, we launch a separate V8 isolate per thread, and the V8 platform singleton is guaranteed to be thread-safe.
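To make the threading concrete, here's a rough Python analog of the timeout path. The name `run_thread_with_timeout` mirrors our Rust helper, but this body is a hypothetical sketch, not Mountaineer's implementation. The property that matters for this bug shows up in the usage line: the render closure always executes on a freshly spawned thread, never on the main thread. Python threads can't be forcibly killed, so on timeout this sketch simply stops waiting and abandons the daemon worker.

```python
import threading
from typing import Callable, TypeVar

T = TypeVar("T")


def run_thread_with_timeout(fn: Callable[[], T], timeout_s: float) -> T:
    """Run fn on a new worker thread, waiting at most timeout_s seconds.

    Hypothetical analog of the Rust helper. Python threads cannot be killed,
    so on timeout this stops waiting and abandons the daemon worker.
    """
    result = []  # filled by the worker on success
    error = []   # filled by the worker on failure

    def worker() -> None:
        try:
            result.append(fn())
        except BaseException as exc:  # re-raised on the caller's thread below
            error.append(exc)

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    thread.join(timeout_s)
    if thread.is_alive():
        raise TimeoutError(f"render did not finish within {timeout_s}s")
    if error:
        raise error[0]
    return result[0]


on_main = run_thread_with_timeout(
    lambda: threading.current_thread() is threading.main_thread(), timeout_s=1.0
)
print(on_main)  # False: the "render" ran on a secondary thread
```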

Still, threading added a possible complication, and there have been occasional reports in Deno's issue tracker about V8's stability in multithreaded contexts. I removed the threading/timeout codepath and recompiled. Lo and behold, the segfault went away. Pages kept rendering no matter how long I used the application.

So our V8 context only segfaults when threading is involved, and only on specific machines: our original webhost (~2 CPUs) ran these threaded timeouts just fine.

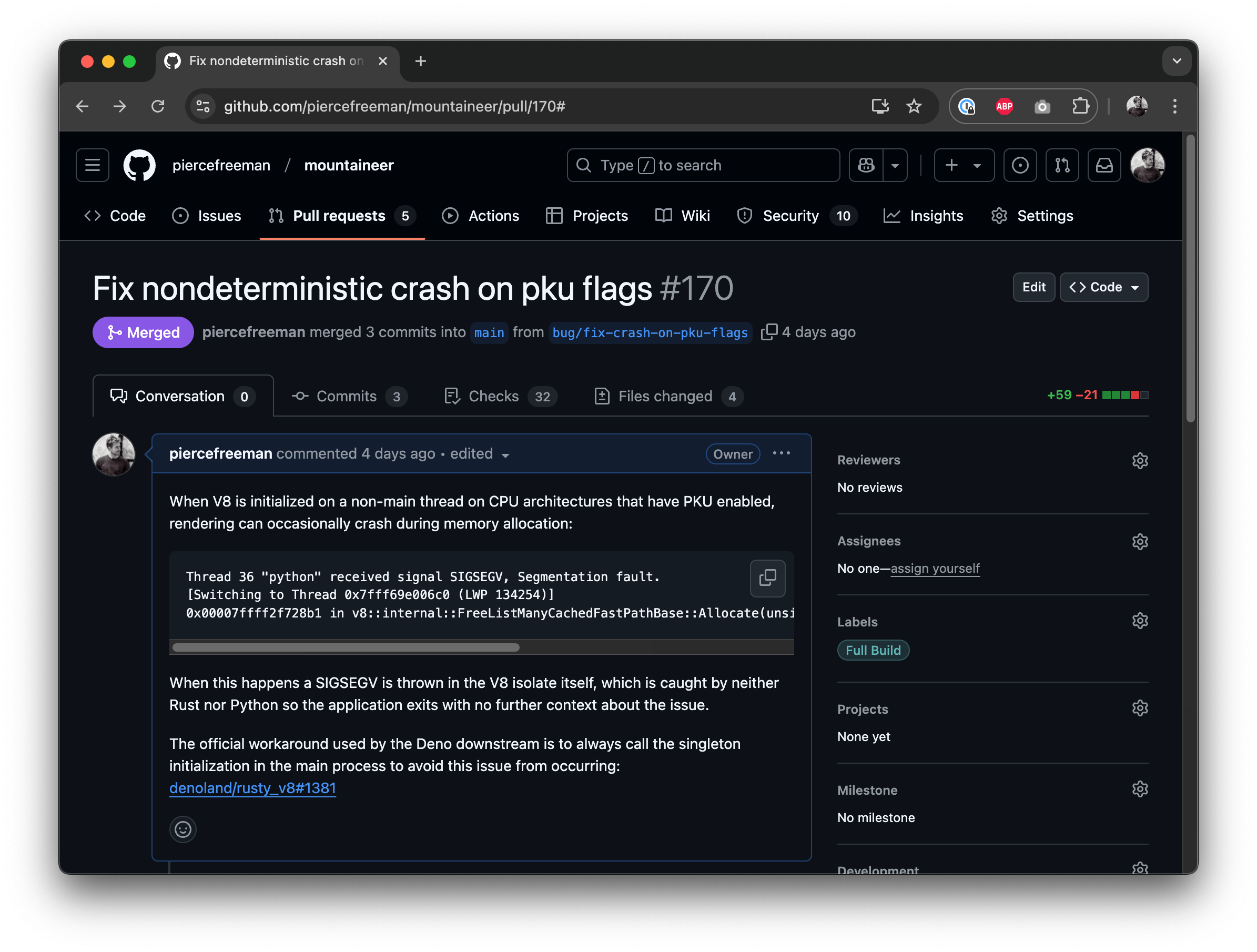

What specifically was different about the new machine? I did a deep dive into the backlog of rusty_v8 issues and found this comment:

> If your CPU has PKU feature you might need to use `v8::new_unprotected_default_platform()`. The issue is that if V8 isn't initialized on main thread and PKU is available it might lead to such crashes. Can you try to collect a Rust backtrace (`RUST_BACKTRACE=1`)?

We use a `lazy_static!` singleton to initialize our V8 platform:

```rust
lazy_static! {
    static ref INIT_PLATFORM: () = {
        // Include ICU data file.
        // https://github.com/denoland/deno_core/blob/d8e13061571e587b92487d391861faa40bd84a6f/core/runtime/setup.rs#L21
        v8::icu::set_common_data_73(deno_core_icudata::ICU_DATA).unwrap();

        // Initialize a new V8 platform
        let platform = v8::new_default_platform(0, false).make_shared();
        v8::V8::initialize_platform(platform);
        v8::V8::initialize();
    };
}

lazy_static::initialize(&INIT_PLATFORM);
```

This platform initialization should happen only once per process. But in the Mountaineer renderer, it ran lazily, the first time an `Ssr` instance was created. If that first instance was created inside the threaded codepath, the `lazy_static!` (and with it the V8 platform) was initialized on that secondary thread.
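The trap is easy to reproduce in miniature. In this Python sketch, the `LazyPlatform` class is a hypothetical stand-in for `INIT_PLATFORM`, not Mountaineer code: its one-time setup runs on whichever thread asks first, and it records whether that happened to be the main thread.

```python
import threading


class LazyPlatform:
    """Hypothetical stand-in for a lazy_static! global like INIT_PLATFORM.

    Setup runs exactly once, on whichever thread calls get() first.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.initialized_on_main = None  # None until setup has run

    def get(self) -> None:
        with self._lock:
            if self.initialized_on_main is None:
                # Real code would load ICU data and initialize V8 here.
                self.initialized_on_main = (
                    threading.current_thread() is threading.main_thread()
                )


v8_platform = LazyPlatform()

# The first use happens inside a render worker, as in the timeout codepath.
worker = threading.Thread(target=v8_platform.get)
worker.start()
worker.join()
print(v8_platform.initialized_on_main)  # False: setup ran on the worker thread

# Later calls from the main thread reuse that result; nothing re-initializes.
v8_platform.get()
print(v8_platform.initialized_on_main)  # False
```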

According to the issue, this can be a problem when the CPU supports PKU. Lo and behold, from our earlier CPU comparison:

```
Flags: ...pku...
```

PKU was present on the new machine but not the old one. One sneaky CPU flag caused this whole saga.
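Spotting one flag in a long hardware diff is easier with a set difference. This Python sketch compares the feature flags from two `lscpu` or `/proc/cpuinfo` dumps; the helper names are hypothetical, and the sample dumps are made up for illustration rather than taken from our machines.

```python
def cpu_flags(dump: str) -> set:
    """Extract CPU feature flags from `lscpu` or /proc/cpuinfo output.

    lscpu prints "Flags:" and /proc/cpuinfo prints "flags\t\t:", so the key is
    matched case-insensitively. Only the first flags line is used, assuming
    every core reports the same features.
    """
    for line in dump.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "flags":
            return set(value.split())
    return set()


def flag_diff(old_dump: str, new_dump: str) -> tuple:
    """Return (flags only on the new machine, flags only on the old machine)."""
    old, new = cpu_flags(old_dump), cpu_flags(new_dump)
    return sorted(new - old), sorted(old - new)


# Made-up sample dumps, for illustration only.
old = "Model name: Example CPU A\nFlags: fpu sse4_2 avx2"
new = "Model name: Example CPU B\nFlags: fpu sse4_2 avx2 avx512f pku ospke"
print(flag_diff(old, new))  # (['avx512f', 'ospke', 'pku'], [])
```

On Linux, the separate `ospke` flag indicates that the kernel has actually enabled protection keys, not just that the CPU supports them.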

I'm by no means an x86 expert, but my understanding is that the `pku` flag advertises hardware support for Memory Protection Keys for Userspace (PKU). It lets software tag memory pages with one of 16 protection keys and then change the access rights for each key by updating the per-thread PKRU register.
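To make the PKRU register concrete: Intel documents it as a 32-bit register with two bits per protection key, an Access-Disable bit at position 2k and a Write-Disable bit at position 2k+1 for each of the 16 keys. This Python sketch (a hypothetical helper) decodes a PKRU value into per-key rights; the example value is illustrative, not one captured from our machines.

```python
def decode_pkru(pkru: int) -> dict:
    """Map each of the 16 protection keys to the rights a PKRU value grants.

    For key k, bit 2k is Access-Disable (AD) and bit 2k+1 is Write-Disable (WD).
    """
    rights = {}
    for key in range(16):
        access_disabled = (pkru >> (2 * key)) & 1
        write_disabled = (pkru >> (2 * key + 1)) & 1
        if access_disabled:
            rights[key] = "no access"
        elif write_disabled:
            rights[key] = "read-only"
        else:
            rights[key] = "read/write"
    return rights


# Illustrative value: every key except key 0 is access-disabled.
example = decode_pkru(0x55555554)
print(example[0], example[1], example[15])  # read/write no access no access
```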

I suspect V8 sets up the PKRU register differently on the main thread than on secondary threads. Since security initialization typically happens on the main thread, it's possible it never touches PKRU on secondary threads, leaving allocations to crash when they hit permission violations.

Fix

The fix was simple: only 59 lines of code. We just had to initialize our lazy static on the main thread. Additional isolates could still be spawned on separate threads without issue, as long as the platform itself was initialized on the main thread.
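The real change lives in Mountaineer's Rust code and isn't reproduced here. As a language-neutral illustration, this Python sketch (all names hypothetical) shows the ordering the fix relies on: force the one-time platform setup from the main thread at startup, fail loudly if anything tries to do it from elsewhere, and let worker threads created afterwards reuse the existing platform. In the Rust code, the analogous step would presumably be forcing `INIT_PLATFORM` from the main thread before any render thread is spawned.

```python
import threading

_setup_lock = threading.Lock()
_setup_on_main = None  # None until the one-time setup has run


def ensure_platform() -> bool:
    """Run the one-time setup if needed; return whether it ran on the main thread."""
    global _setup_on_main
    with _setup_lock:
        if _setup_on_main is None:
            # Real code would load ICU data and initialize V8 here.
            _setup_on_main = threading.current_thread() is threading.main_thread()
        return _setup_on_main


def startup() -> None:
    """Call once at process start, before any render threads exist."""
    if threading.current_thread() is not threading.main_thread():
        raise RuntimeError("platform setup must happen on the main thread")
    ensure_platform()


startup()  # eager, main-thread initialization

seen = []
worker = threading.Thread(target=lambda: seen.append(ensure_platform()))
worker.start()
worker.join()
print(seen)  # [True]: the worker reuses the platform set up on the main thread
```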

With that fix shipped and Mountaineer bumped to the latest version, the new machine accepted traffic and rendered pages just fine.

---

¹ Thanks for asking. No, this is not actually our prod IP. It would be really sweet if it were, though.